I'm using the K-means clustering algorithm to build a recommendation engine for an e-commerce application. That meant pulling in the scikit-learn and pandas libraries, which pushed the size of my deployment package beyond the AWS Lambda package limits.

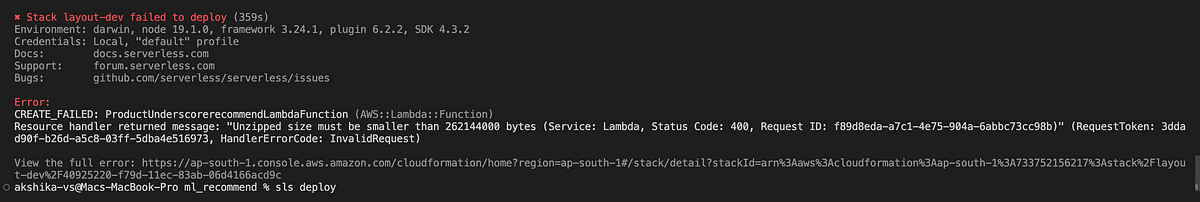

During the deployment of my stack, I got this error:

Deployment Package Limits

When developing a serverless application, you should keep some soft and hard limits in mind for each AWS service. These restrictions are intended to protect both the customer and the provider from unintentional use. In addition, guardrails are provided to ensure that best practices are followed.

AWS Lambda has a hard limit of 50 MB for a compressed (zipped) deployment package uploaded directly, and a hard limit of 250 MB for the uncompressed package, including any layers.
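Before deploying, it can help to measure a build directory against these two numbers. The following is a minimal sketch, not an official tool: the function names are made up for illustration, and it assumes the limits are counted in binary megabytes (1 MB = 1024 × 1024 bytes).

```python
import os
import tempfile
import zipfile

# Limits quoted in the text above, in bytes (assumption: binary megabytes).
MAX_ZIPPED_BYTES = 50 * 1024 * 1024
MAX_UNZIPPED_BYTES = 250 * 1024 * 1024


def unzipped_size(package_dir):
    """Sum the sizes of all regular files under package_dir."""
    total = 0
    for root, _dirs, files in os.walk(package_dir):
        for name in files:
            path = os.path.join(root, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def zipped_size(package_dir):
    """Zip package_dir into a temporary archive and return the archive size."""
    with tempfile.TemporaryDirectory() as tmp:
        archive = os.path.join(tmp, "package.zip")
        with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
            for root, _dirs, files in os.walk(package_dir):
                for name in files:
                    path = os.path.join(root, name)
                    zf.write(path, os.path.relpath(path, package_dir))
        return os.path.getsize(archive)


def check_package(package_dir):
    """Return both sizes and whether each one fits under its limit."""
    unzipped = unzipped_size(package_dir)
    zipped = zipped_size(package_dir)
    return {
        "unzipped_bytes": unzipped,
        "zipped_bytes": zipped,
        "unzipped_ok": unzipped <= MAX_UNZIPPED_BYTES,
        "zipped_ok": zipped <= MAX_ZIPPED_BYTES,
    }
```

Calling `check_package("path/to/build")` on the folder you are about to deploy gives a quick indication of which of the two limits is the one being exceeded.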

How do we deal with size limits when using libraries like scikit-learn, pandas, etc.?

To help manage large dependencies such as pandas, numpy, scipy, and scikit-learn, the serverless-python-requirements plugin for the Serverless Framework can compress them inside the deployment package. Because the libraries are then shipped zipped, you'll need a small change in your code to decompress them at runtime.

First, add the following configuration to your serverless.yml file.

    custom:
      pythonRequirements:
        zip: true

Then add this piece of code to the very beginning of the handler module in your handler.py file, before any other imports.

    try:
        import unzip_requirements
    except ImportError:
        pass

Note: The handler function in handler.py is the entry point of a Lambda function. It is the function that Lambda calls each time your function is invoked.
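Putting the two pieces together, a handler module could look roughly like the sketch below. The function name `recommend`, the `user_id` field, and the response shape are hypothetical and only there to make the example complete; the real handler would load the model and compute recommendations.

```python
# handler.py
try:
    # Only present in packages built with `zip: true`; unpacks the bundled requirements.
    import unzip_requirements  # noqa: F401
except ImportError:
    # Running locally or without zipped requirements: nothing to unpack.
    pass

import json


def recommend(event, context):
    """Hypothetical entry point: Lambda passes in the event and a context object."""
    # Heavy imports such as pandas or sklearn belong after the unzip step above.
    user_id = (event or {}).get("user_id")
    return {
        "statusCode": 200,
        "body": json.dumps({"user_id": user_id, "recommendations": []}),
    }
```

The `try`/`except` keeps the same file usable on a local machine, where the `unzip_requirements` module does not exist.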

With these changes, the dependencies are shipped compressed and unpacked when the function starts, which should resolve the error about the package size limit being exceeded.
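Conceptually, the unzip step extracts the bundled archive into a writable directory and puts that directory on `sys.path`, so that later imports find the libraries. The sketch below illustrates the idea only; it is not the plugin's actual code, and the archive name, target directory, and `demo_dependency` module are stand-ins invented for the demonstration.

```python
import importlib
import os
import sys
import tempfile
import zipfile


def load_zipped_requirements(zip_path, target_dir):
    """Extract zip_path into target_dir once, then make it importable."""
    if not os.path.isdir(target_dir) or not os.listdir(target_dir):
        os.makedirs(target_dir, exist_ok=True)
        with zipfile.ZipFile(zip_path) as zf:
            zf.extractall(target_dir)
    if target_dir not in sys.path:
        sys.path.insert(0, target_dir)
    importlib.invalidate_caches()


# Demo with a stand-in archive that contains one tiny module.
workdir = tempfile.mkdtemp()
demo_zip = os.path.join(workdir, "requirements.zip")
with zipfile.ZipFile(demo_zip, "w") as zf:
    zf.writestr("demo_dependency.py", "ANSWER = 42\n")

load_zipped_requirements(demo_zip, os.path.join(workdir, "unpacked"))

import demo_dependency  # resolved from the extracted directory

print(demo_dependency.ANSWER)  # prints 42
```

On Lambda the extraction has to target `/tmp`, since that is the only writable location, and the cost of unpacking is paid on a cold start rather than on every invocation.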

A Lambda Layer is another option for dealing with large dependencies. Keep in mind that layers still count toward the 250 MB uncompressed limit.

To use one, include the layer option in the configuration in your serverless.yml file.

    custom:
      pythonRequirements:
        layer: true

The requirements are compressed into a zip file and a layer is produced from it automatically. A reference to that layer must then be added to each function that will use it.

Add this reference to the function defined in the serverless.yml file.

    functions:
      myfunction:
        handler: handler.myfunctions
        layers:
          - Ref: PythonRequirementsLambdaLayer

You can also customize the layer itself in the serverless.yml file:

    custom:
      pythonRequirements:
        layer:
          name: ${self:provider.stage}-layerName
          description: lambda layer for python requirements
          compatibleRuntimes:
            - python3.8
          licenseInfo: GPLv3
          allowedAccounts:
            - '*'

Key Note: We can also use the Image package type (a container image), which has a much larger size limit of 10 GB, but it requires more configuration than the methods mentioned above.

When we build a machine learning model, the algorithms we use usually depend on large libraries. To overcome the Lambda package size limit while deploying these models, we can use any of the approaches above.

Keep Learning Keep Growing 🥂.